Siri isn’t exactly the smartest kid on the block. For years, Apple’s intelligence system has lagged behind competitors like Google and Amazon, with the company flatly refusing to sacrifice its customers’ data. While that stance has helped the company score some brownie points, it has come at the cost of a truly useful AI.

Google’s smarts have been something I’ve marvelled at for years, and I’ve always wondered if Siri could ever catch up. With WWDC 21, Apple proved that it could, with some truly amazing features that show you don’t need massive amounts of data to create a functional AI. Apple isn’t competing with Google just yet, but it got pretty close in some areas, which is quite impressive given that all the processing happens on-device. That approach comes with its own pitfalls, though, as I’ll highlight later.

Of course, not all these on-device intelligence features are bound to be useful, but there’s one in particular that has a lot of people talking – Live Text. This is going to be one of those things that fundamentally changes the way we use our iPhones, much like Siri did when it was first introduced. But beyond Live Text, there are a few more neat features Apple has baked into its latest operating systems. Here’s a look at all the smarts your iPhone, iPad and Mac are getting in 2021.

Live Text

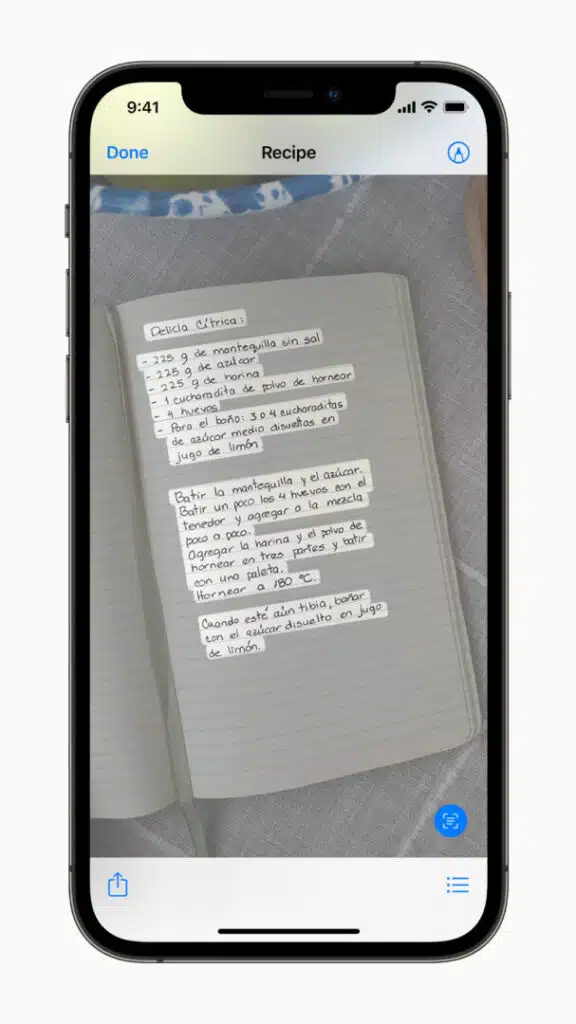

The biggest, and dare I say best, update to come out of WWDC has to be Live Text. It’s intuitive, and works really well according to early testers. The idea is simple – the feature can instantly pick up any text around you, allowing you to translate or copy it in real time. It works straight from the Camera app, or from existing photos in your library. Think of it as optical character recognition (OCR) with the intelligence of AI.

This is something I’ve been wanting for a very long time. I often take a quick snap of phone numbers, email IDs, or even locations, only to struggle to type them in manually later. Live Text is bound to make that seamless. Another advantage is that it works with Apple’s Translate app as well, but this isn’t a lot to boast about. At launch, Live Text will only work with English, Chinese (both simplified and traditional), French, Italian, German, Spanish, and Portuguese. That’s actually very disappointing, especially for users outside the US.

Translate also supports Arabic, Japanese, Korean, and Russian, so it’s unclear why Apple can’t extend Live Text to these languages, at least with online intelligence processing. Even then, it’s a pitiful number of languages compared to Google Translate. The only advantage Apple has here is that Translate is baked into the UI, so you can access it from anywhere. But the limited language support means that most of us will have to copy and paste text into Google Translate.

That said, Live Text is, on its own, a very powerful feature. Even if you don’t use Translate, you can still take advantage of it to quickly copy important text from visuals all around you. Early testers got it to work with billboards, papers, and even product boxes. That’s pretty impressive, even more so when you consider it is all happening on-device. Now that is intelligence at its finest!

Visual Look Up

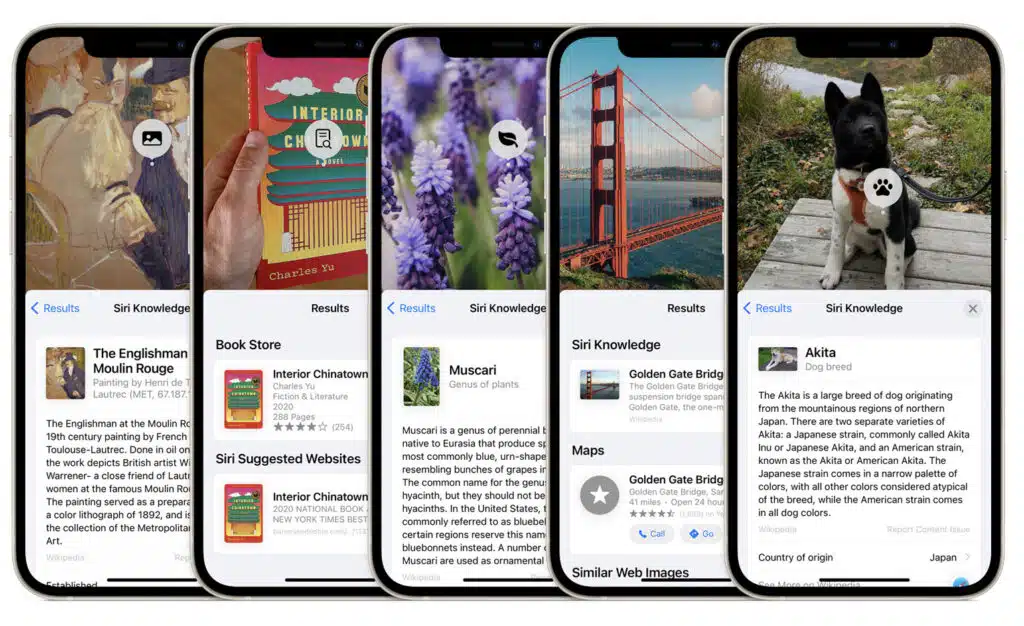

In addition to Live Text, Apple has introduced Visual Look Up, which will allow users to gather information on objects, books, landmarks, nature, and art from photos. The company didn’t go into great detail about this at WWDC, and I suspect that’s because it’s not actually as comprehensive or efficient as Live Text.

The feature is an exact replica of Google Lens – Apple’s AI can scan an image, or real-time data from the Camera to tell you more about what you are seeing. You simply tap on an object, say a cat, and Apple will pull data about what breed of cat that is. Similarly, it can tell you more about a landmark like the Statue of Liberty. Early tests of the feature were mixed at best, and I fear this isn’t going to be as groundbreaking as some might expect.

At WWDC, Apple claimed Visual Look Up is processed on-device, but to surface results you’ll still need an internet connection. On top of that, Apple’s search capabilities are average at best compared to Google’s. I extensively use Spotlight and Siri to search for information, and more often than not, I find myself moving to the Google app simply because the results are underwhelming and hard to read. Google has spent decades at this game, and is clearly the superior alternative. Visual Look Up needs a lot more refinement, and more effort from Apple to collate data from the web. For now, I think it’s going to be a gimmick, much like 3D Touch on iOS.

There’s a clear use case for this tech, as Google has demonstrated, but Apple just isn’t in the same league. If you live in a city like New York, London, or San Francisco, the feature may work better since Apple Maps has mapped those locations out extensively, but otherwise I don’t think you’ll find much use for it. Maybe to identify cat and dog breeds, but I’d still trust Google Lens more for that.

On-device intelligence will manage notifications

As expected, Apple updated its notifications in iOS 15, and I have to admit I am not too excited about this. While it promises a lot, there remain plenty of questions about how well it works. With iOS 15, Apple has introduced a ‘notification summary’ that will display only your most important notifications. All others (which Apple calls non-time-critical) will be delivered at a more “opportune time”.

It appears that Apple won’t pick the “opportune time” to deliver these notifications; you set it yourself. While that’s fantastic, it seems to be a pretty rigid schedule. I don’t see that being a bad thing for most people, but I guess some will be annoyed that they don’t have finer control. This is a pretty useful feature if you often find yourself bombarded with notifications at inconvenient times, but there’s one thing I am particularly doubtful about – priority.

Apple says iOS 15 will arrange notifications by priority, “with the most relevant notifications rising to the top, and based on a user’s interactions with apps.” On paper that sounds great, but it raises some questions in practice. How does the system know which notifications are relevant? For example, I frequently use the ESPNcricinfo app to keep track of cricket games. I always want notifications for game updates, but not always for news stories. Will iOS be able to recognise this and hold back only the news notifications for later? Somehow I doubt that.

Food delivery apps are another example. Of course I want Uber Eats to notify me when my delivery is on the way, but I really don’t care for the promotional offers it throws up from time to time. While I like the visual overhaul, I really doubt that Apple’s AI is up to the task of picking out these minute differences. I guess we will have to see.

Other areas where intelligence will work

Two other areas that are getting better AI smarts are Photos and Spotlight. Photos I get, and it makes sense even if it’s not something everyone uses. Apple claims its on-device intelligence can now pick an apt song from Apple Music for your Memories. Personalized music on Apple Music has been relatively impressive for me, so I am inclined to give Apple the benefit of the doubt here. If you do love the curated memories, this feature will be delightful for sure. And I am glad Apple is tapping into Apple Music; the other music options were ok, but could definitely have been improved.

Spotlight is the area I am a bit more concerned about. As I mentioned before, I’ve tried to use the feature extensively for web searching in the past, but it just isn’t as useful as the Google app. The visual overhaul is definitely very welcome and appreciated, but I am not sure if it will live up to Apple’s promise. For on-device content like messages, emails, photos, and documents I am sure it will be fantastic, but web search is still a big question mark. This is just another one of those things I’ll have to test extensively before I come to a conclusion.

Finally, and perhaps most importantly, Siri is going offline in 2021. Thanks to on-device intelligence, Siri can now process basic commands like opening apps, changing settings, and setting timers without the need for an internet connection. This is huge, and without a doubt one of the most useful areas into which Apple has expanded on-device intelligence. It’s not just about working offline: because Siri doesn’t need an internet connection to process these requests, it’s also blazingly fast. Early beta videos show near-instant processing of these requests, which is no doubt going to benefit heavy Siri users.

I think a lot of my concerns stem from two key areas – Apple’s AI abilities, and the processor’s abilities. iOS 15 will work with the iPhone 6s and above, which is fantastic, but I really doubt that the 6s or 7 has the capability to run all these on-device processes. The Verge reported that Live Text, Portrait mode in FaceTime, and immersive walking directions in Apple Maps won’t work with devices older than the iPhone XS. I’m inclined to believe older devices will also struggle with Visual Look Up, notifications, and offline Siri. I have an iPhone 7, and although it is in working condition, it’s just not as fast or smooth on the latest OS. There’s plenty of time between now and September, so I hope Apple proves me wrong.